This article applies the productivity framework introduced in previous posts to draw practical lessons for running a project in the intelligent document processing (IDP) domain.

The Ultimate Goal

The hype about AI is as high as it could get; however, IDP solutions are not all about AI.

The only goal of an IDP solution is to improve human productivity. However, doing so comes with costs, and the return on these investments (costs) varies widely depending on which problem you prioritize solving.

Once improving human productivity becomes the guiding principle, you might prioritize very different objectives for your IDP project. For example, you might choose to enhance human productivity by building a better user experience rather than building intelligent AI for touchless processing or hyper-focusing on AI accuracy. Don't get me wrong: I enjoy building sophisticated AI models and complicated multi-layer extraction algorithms. But if you lose sight of the ROI, you might not prioritize the right features, user stories, or requirements. This article attempts to bring these concepts home with a real case: a year-and-a-half-long project for a solution meant to process 18 million documents a year.

Productivity stats from an existing case

As you may remember from the previous post, the productivity improvement in the new solution was hovering around 79% for my client. So let's break down the improvement module by module and see where we got the best ROI for the money we spent.

If you need a reminder of what each module does, please review the Typical Workflow section here.

The table below shows the contribution of each module to the overall productivity.

| Module Name | Improvement |
|---|---|
| Document Review | 9% |
| Validation 1 | 10% |
| Validation 2 | 38% |
| Quality Control | 22% |
| Total | 79% |

Document Review ROI

Any improvement in the classifiers results in documents either bypassing the Document Review module or humans spending less time working in it. We spent around three months building multiple classification and separation models. The improvement in accuracy was staggering: overall recall jumped from 40% to 90% while maintaining 98% accuracy. Yet three resources working around the clock for three months, with fantastic success, produced only 9% of the 79% overall improvement.

So let us calculate the ROI for this module. I assumed an average annual salary of $100K for solution developers and average annual wages of $30K for human operators. In addition, my customer's document services department employs 40 people dedicated to this specific solution.

ROI = (Productivity Improvement * Total Annual Operator Wages) / Project Cost

(9% * 40 people * $30,000) / (3/12 year * 3 people * $100,000) = 144%

For every dollar we spent building the classification models, we get back $1.44 each year. That beats average market returns, even in an era of never-ending money printing by central banks.
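The same arithmetic can be written as a small Python function. This is a minimal sketch, not a financial model: the name `annual_roi` and its parameters are illustrative, every duration is converted to years (three months is 3/12, one week is 1/52), and, like the formula above, it counts only operator wages as the benefit and developer salaries as the cost.

```python
def annual_roi(improvement: float, operators: int, operator_wage: float,
               developers: int, years: float, developer_salary: float) -> float:
    """Benefit/cost ratio used in this article (hypothetical helper).

    Benefit: one year of operator wages saved by the productivity improvement.
    Cost: developer salaries for the time spent building the improvement.
    """
    annual_savings = improvement * operators * operator_wage
    project_cost = developers * years * developer_salary
    return annual_savings / project_cost


# Document Review: 9% improvement, 40 operators at $30K,
# 3 developers for 3 months (3/12 of a year) at $100K.
doc_review = annual_roi(0.09, 40, 30_000, 3, 3 / 12, 100_000)
print(f"Document Review ROI: {doc_review:.0%}")  # Document Review ROI: 144%
```

Keeping all durations in years is the main point of the sketch: mixing months or weeks with annual salaries is the easiest way to get these numbers wrong.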

Quality Control ROI

Quality Control is the module to which all exceptions are routed. A document can end up here for many reasons.

Only two resources worked on this leg of the project, spending one month building rules and logic in various modules to reduce the number of cases that go to Quality Control. These improvements included document review rules and shortcuts in the Validation 1 and Validation 2 modules for reclassifying documents while maintaining the integrity of logical fields. We gathered the insights behind them by visiting the document center, talking to end users, and reviewing the end users' documented procedures: a fully human-centric approach based on design thinking principles.

These simple rules and flows contributed an impressive 22% to human productivity.

Let’s calculate the ROI for the Quality Control module:

(22% * 40 people * $30,000) / (1/12 year * 2 people * $100,000) = 1584%

This is an excellent return by any measure, almost as good as crypto market returns during a bull run!

Validation 1 ROI

This module is another great example of improving human productivity. Currently, my client's extraction AI is deployed as AI assistance, so there is almost no difference in the extraction accuracy of the fields reviewed in this module between the new and old solutions. A wise person would ask: what's behind the 10% productivity contribution? The answer is the user experience of batch loading! We knew firsthand from our end users that they were sensitive to batch loading times (closing the current batch and opening the next one). So we re-engineered all the code executed in the batch close and batch open events and optimized its performance. One example was replacing on-disk access with in-memory operations.

How much did we spend re-engineering the Validation 1 code? About one week of one person's time. How did users perceive the impact of the new data structure? Instantaneous batch closes and opens. They were so excited about this that, given their past trauma with the user experience, they closely monitored the speed as we moved from the UAT to the Prod environment during the go-live period.
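The client's actual code is not shown here, so the following is only a hypothetical Python illustration of the general pattern of replacing on-disk access with in-memory operations. The batch layout (a JSON file mapping document IDs to fields) and the names `OnDiskBatch`, `InMemoryBatch` and `review_batch` are assumptions made for the example. The slow version re-reads the batch file on every field lookup; the fast version reads it once when the batch opens.

```python
import json
import tempfile
from pathlib import Path
from typing import Iterable, Sequence


class OnDiskBatch:
    """Slow pattern (hypothetical): re-read the batch file on every field lookup."""

    def __init__(self, path: Path):
        self.path = path
        self.disk_reads = 0

    def field(self, doc_id: str, name: str) -> str:
        self.disk_reads += 1
        return json.loads(self.path.read_text())[doc_id][name]


class InMemoryBatch:
    """Faster pattern: read the batch file once, when the batch opens."""

    def __init__(self, path: Path):
        self.data = json.loads(path.read_text())
        self.disk_reads = 1

    def field(self, doc_id: str, name: str) -> str:
        return self.data[doc_id][name]


def review_batch(batch_cls, path: Path, doc_ids: Iterable[str],
                 fields: Sequence[str] = ("name", "date")) -> int:
    """Simulate an operator visiting every field of every document; return disk reads."""
    batch = batch_cls(path)
    for doc_id in doc_ids:
        for name in fields:
            batch.field(doc_id, name)
    return batch.disk_reads


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "batch.json"
    docs = {f"doc{i}": {"name": f"Customer {i}", "date": "2021-01-01"} for i in range(100)}
    path.write_text(json.dumps(docs))
    print(f"on-disk reads: {review_batch(OnDiskBatch, path, docs)}")      # on-disk reads: 200
    print(f"in-memory reads: {review_batch(InMemoryBatch, path, docs)}")  # in-memory reads: 1
```

In a real system the in-memory copy also needs to be written back once on batch close, which keeps the expensive I/O at the two events operators already expect to wait for.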

Let’s calculate the ROI again:

(10% * 40 people * $30,000) / (1/52 year * 1 person * $100,000) = 6240%

I know what you are thinking: it's hard to generate these kinds of returns in any market, even with insider information. I couldn't agree more!

Validation 2 ROI

Since the extraction AI is deployed as AI assistance, users must manually review all the fields, and the AI's confidence is completely ignored for now. We spent an extra nine person-months (three resources for three months) building partial automation. An essential task in building partial automation for most IDP solutions is reducing false positives to absolute zero for most fields. It's too early to say how terrible the ROI of building partial automation is. Still, my gut feeling is that if we had targeted AI assistance as the final state for extraction, the ROI would have been significantly higher than building partial automation. Once the extraction AI is redeployed as partial automation, we can calculate a more accurate ROI.
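How false positives were driven to zero in this project isn't described, so the Python sketch below shows just one common way to approach it, under stated assumptions. For each field, take a labeled validation set of (confidence, correct?) pairs, set the auto-accept threshold at the highest confidence ever observed on a wrong extraction, and route everything at or below it to a human. The field names, the sample data, and the helper names `zero_fp_threshold` and `automation_rate` are all hypothetical.

```python
from typing import Dict, List, Tuple

# (model confidence, was the extracted value correct?) for one field
Samples = List[Tuple[float, bool]]


def zero_fp_threshold(samples: Samples) -> float:
    """Highest confidence observed on a wrong extraction.

    Auto-accepting only values strictly above this threshold gives zero
    false positives on this validation set (not a guarantee in production).
    """
    return max((conf for conf, correct in samples if not correct), default=float("-inf"))


def automation_rate(samples: Samples, threshold: float) -> float:
    """Share of extractions that would skip human review at this threshold."""
    return sum(conf > threshold for conf, _ in samples) / len(samples)


# Hypothetical validation data for two fields.
validation: Dict[str, Samples] = {
    "invoice_total": [(0.99, True), (0.97, True), (0.95, False),
                      (0.93, True), (0.80, False), (0.99, True)],
    "invoice_date": [(0.98, True), (0.96, True), (0.90, True), (0.85, True)],
}

for field, samples in validation.items():
    t = zero_fp_threshold(samples)
    print(f"{field}: threshold={t}, automated={automation_rate(samples, t):.0%}")
```

The sketch also shows why this work is expensive: a single confident mistake (0.95 for `invoice_total`) pushes the threshold up and halves the share of values that can be automated, so reaching zero false positives usually means improving the model, not just tuning thresholds.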

But for now, let's calculate the ROI for the current AI assistance state:

(38% * 40 people * $30,000) / (3/12 year * 3 people * $100,000) = 608% (roughly 600%)

Recommendation based on ROI

Here is a summary of the improvements, ranked by ROI:

| Module Name | ROI | Area of Focus |
|---|---|---|
| Validation 1 | 6240% | Better user experience of batch loading |
| Quality Control | 1584% | User experience of reclassification, doc review rules, etc. |
| Validation 2 | 600% | Extraction AI |
| Doc Review | 144% | Classification and separation AI |
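As a cross-check, this short Python sketch recomputes every ROI in the table from the inputs given in the module sections and sorts the modules. The wage and salary figures are the article's stated assumptions; the dictionary layout and the `roi` helper are just one convenient, hypothetical way to hold and apply them. Computed exactly, Validation 2 comes out at 608%, which the table rounds to 600%; the ranking is unchanged.

```python
# Inputs stated in the article (wages and salaries are the author's assumptions).
OPERATORS = 40
OPERATOR_WAGE = 30_000   # average annual operator wage
DEV_SALARY = 100_000     # average annual developer salary

# module -> (share of productivity improvement, developers, years of effort)
MODULES = {
    "Doc Review": (0.09, 3, 3 / 12),       # 3 people, 3 months
    "Quality Control": (0.22, 2, 1 / 12),  # 2 people, 1 month
    "Validation 1": (0.10, 1, 1 / 52),     # 1 person, 1 week
    "Validation 2": (0.38, 3, 3 / 12),     # 3 people, 3 months
}


def roi(improvement: float, developers: int, years: float) -> float:
    """One year of saved operator wages divided by developer cost."""
    return (improvement * OPERATORS * OPERATOR_WAGE) / (developers * years * DEV_SALARY)


# Highest ROI first, matching the order of the summary table.
ranked = sorted(MODULES, key=lambda name: roi(*MODULES[name]), reverse=True)
for name in ranked:
    print(f"{name:<16} {roi(*MODULES[name]):>6.0%}")
```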

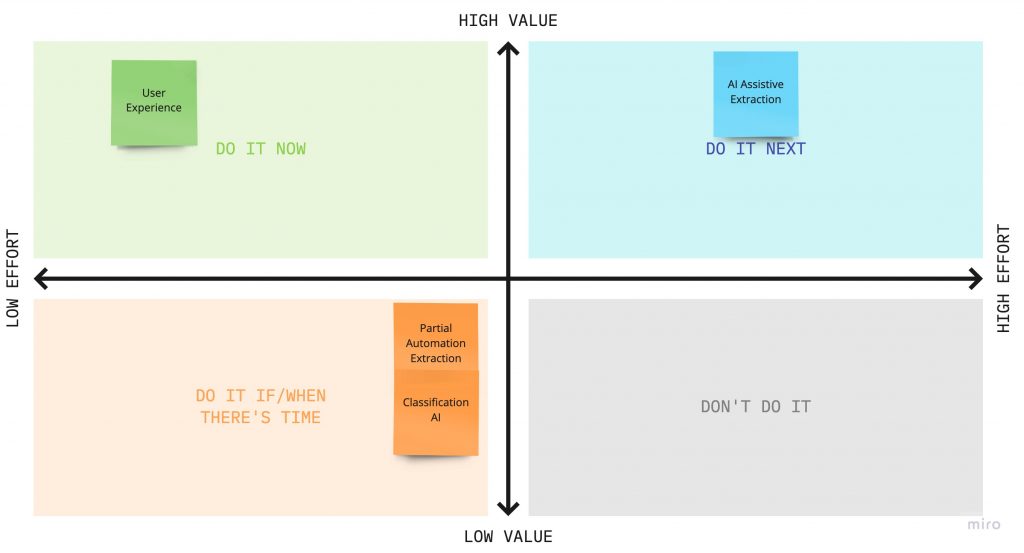

Here is a prioritization matrix based on the ROIs in this case:

Finally, here are my recommendations based on the ROIs above, sorted in order of importance.

Focus on user experience

- Optimize your code for efficiency as much as possible.

- Make exception handling as seamless as possible.

- Build validation rules, formatters, and validation forms following best practices to lower cognitive load.
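To make the last bullet concrete, here is a small hypothetical Python sketch of a formatter and a validation rule. The idea is that the operator can type a value in whatever form is natural, the formatter normalizes it, and the rule flags only what is genuinely invalid, so the operator does not have to remember the target format. The accepted date formats, the amount rule, and the function names are assumptions for illustration, not rules from the client's system.

```python
import re
from datetime import datetime
from decimal import Decimal
from typing import Optional

# Assumed input formats an operator might type; day-first uses dots to avoid
# ambiguity with the month-first slash format.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def format_date(raw: str) -> Optional[str]:
    """Formatter: normalize any accepted date format to ISO 8601, or None if invalid."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def validate_amount(raw: str) -> Optional[str]:
    """Validation rule: accept '1,234.5' or '1234.50'; return a 2-decimal string or None."""
    text = raw.replace(",", "").strip()
    if re.fullmatch(r"\d+(\.\d{1,2})?", text):
        return f"{Decimal(text):.2f}"
    return None


print(format_date("03/15/2021"))   # 2021-03-15
print(validate_amount("1,234.5"))  # 1234.50
```

Returning `None` instead of raising keeps the form logic simple: the form highlights the field, and the operator fixes only that value.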

Extraction

- Deliver the project with extraction in AI-assistance mode. If you are not using a low-code service such as AWS Textract for extraction, defer building extraction to a second phase.

Classification and Separation

- Only build classification and separation AI if you have exhausted all the previous opportunities.